Beyond the "Busy" Bot: Why Activity Isn’t Intelligence in AI Agents

Mark Kendall · 8 hours ago · 2 min read

Telling activity apart from intelligence is the most critical challenge of the current "Agentic Era." It’s the difference between a busy office and a productive one.


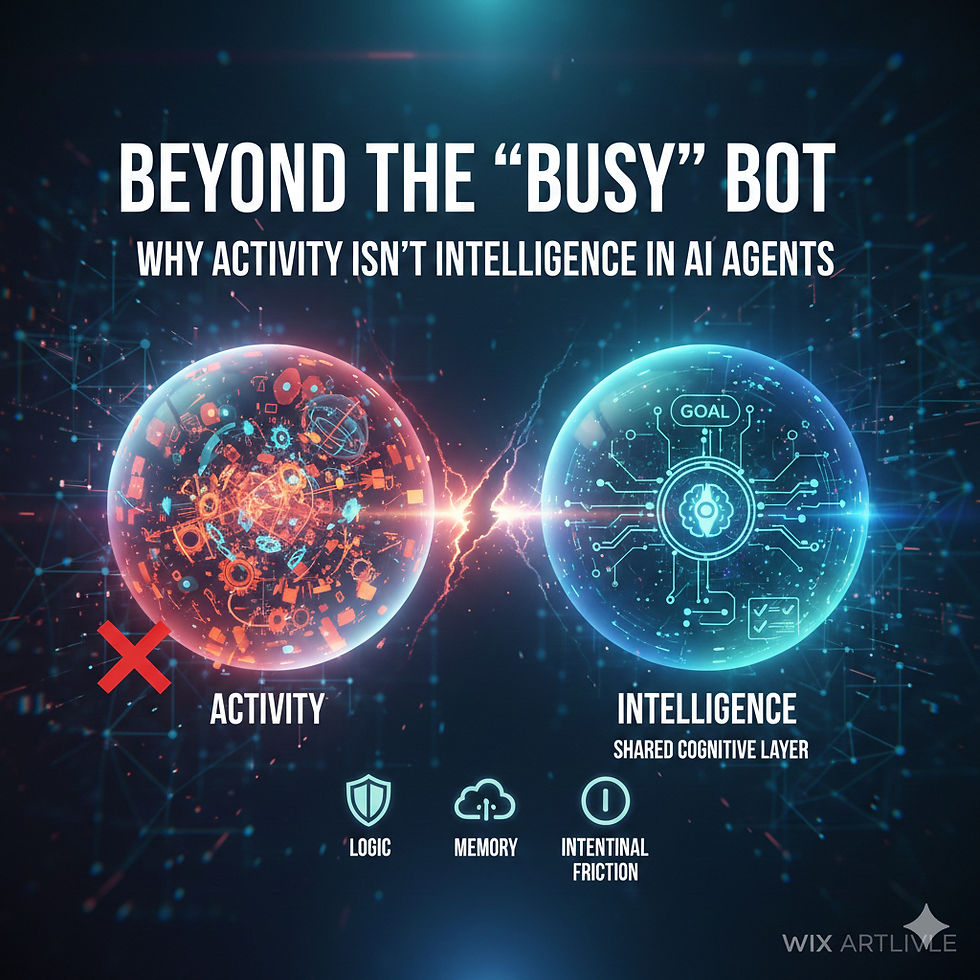


In the rush to automate everything, we’ve entered the era of the AI Agent. Unlike simple chatbots, these agents can browse the web, write code, and execute multi-step workflows. They are, by all accounts, incredibly "active."

But a dangerous trap is emerging for businesses: confusing activity with intelligence. An agent that performs a thousand tasks a second does not necessarily understand why it is doing them, or whether it should be doing them at all. Without a robust foundation, we risk building systems that are "efficiently lost."

The Illusion of Progress

We often measure AI success by throughput—how many emails were processed, how many tickets were closed, or how much data was scraped. This is Activity.

Intelligence, however, is the ability to navigate ambiguity and align actions with a broader goal. An agent without a cognitive layer is like a high-speed car without a GPS or a steering wheel: it moves fast, but it rarely ends up where you actually need it to be.

The Risks of "Blind Automation"

* Context Collapse: Agents may solve a micro-task while inadvertently breaking a macro-strategy.

* The Feedback Loop of Error: Without understanding, an agent can automate a mistake at a scale no human ever could.

* Resource Exhaustion: High activity without high intent leads to "compute waste"—running expensive processes that yield no real business value.

The Missing Link: A Shared Cognitive Layer

To move from "active" agents to "intelligent" ones, we need to implement a shared cognitive layer. This isn’t just about better prompts; it’s about architecture.

1. Clear Decision Frameworks

Agents need "Guardrails of Logic." Instead of just giving an agent a task, we must provide it with a hierarchy of priorities.

> Example: "Prioritize customer retention over speed of resolution."
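To make a "hierarchy of priorities" concrete, here is a minimal Python sketch. It assumes each candidate action already carries a score per priority (how those scores are produced is out of scope), and the priority names, `CandidateAction`, and `choose_action` are hypothetical. Ranking by a tuple ordered from highest to lowest priority makes the comparison lexicographic, so retention always outweighs speed, as in the example above.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

# Hypothetical priority hierarchy, highest priority first.
PRIORITIES = ["customer_retention", "resolution_speed"]

@dataclass
class CandidateAction:
    name: str
    # Assumed scores (0.0 to 1.0) per priority, supplied by some upstream evaluator.
    scores: Dict[str, float]

def rank_key(action: CandidateAction, priorities: List[str]) -> Tuple[float, ...]:
    """Order scores by priority so tuple comparison is lexicographic:
    a lower priority only matters when higher ones are tied."""
    return tuple(action.scores.get(p, 0.0) for p in priorities)

def choose_action(candidates: List[CandidateAction], priorities: List[str]) -> CandidateAction:
    return max(candidates, key=lambda a: rank_key(a, priorities))

candidates = [
    CandidateAction("close_ticket_with_template",
                    {"customer_retention": 0.3, "resolution_speed": 0.9}),
    CandidateAction("escalate_to_account_manager",
                    {"customer_retention": 0.8, "resolution_speed": 0.4}),
]
print(choose_action(candidates, PRIORITIES).name)  # escalate_to_account_manager
```

Reversing the order of `PRIORITIES` flips the choice to the faster template reply, which is the point: the hierarchy, not the individual task, decides.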

2. Contextual Memory

Intelligence requires a "shared history." A cognitive layer lets multiple agents draw on a single source of truth: the brand voice, previous customer interactions, and long-term company goals.
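A shared cognitive layer can be pictured as one store that every agent consults before acting. The sketch below is a deliberately simple, in-memory stand-in under that assumption; `SharedMemory`, its methods, and the agent names are hypothetical, and a production system would more likely back this with a database or other persistent store.

```python
from collections import defaultdict
from typing import Dict, List

class SharedMemory:
    """Hypothetical in-process 'single source of truth' shared by several agents."""

    def __init__(self, brand_voice: str, company_goals: List[str]) -> None:
        self.brand_voice = brand_voice
        self.company_goals = list(company_goals)
        # Interaction history keyed by customer ID.
        self._history: Dict[str, List[str]] = defaultdict(list)

    def record(self, customer_id: str, agent: str, note: str) -> None:
        """Any agent can append what it did for a customer."""
        self._history[customer_id].append(f"{agent}: {note}")

    def context_for(self, customer_id: str) -> dict:
        """Everything an agent should see before acting on this customer.
        Uses .get so that reading an unknown customer does not create an entry."""
        return {
            "brand_voice": self.brand_voice,
            "company_goals": list(self.company_goals),
            "history": list(self._history.get(customer_id, [])),
        }

memory = SharedMemory(brand_voice="warm, concise",
                      company_goals=["long-term customer retention"])
# One agent records an interaction...
memory.record("cust-42", "billing_agent", "issued a partial refund")
# ...and a different agent sees it before replying.
support_context = memory.context_for("cust-42")
print(support_context["history"])  # ['billing_agent: issued a partial refund']
```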

3. Intentional Friction

True intelligence involves knowing when not to act. A sophisticated agentic system should include "checkpoints" where the AI pauses to validate its path against the desired outcome.
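One way to build in intentional friction is to run each planned step through a checkpoint before executing it, and to halt for review when a step fails the check. In this minimal sketch the alignment test is a caller-supplied predicate (here, a toy rule that blocks anything destructive); `Step`, `run_with_checkpoints`, and the rule itself are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional

@dataclass
class Step:
    description: str
    action: Callable[[], str]  # does the work and returns a short log line

@dataclass
class RunResult:
    completed: List[str] = field(default_factory=list)
    paused_at: Optional[str] = None  # set when a checkpoint stops the run

def run_with_checkpoints(steps: List[Step],
                         is_aligned: Callable[[Step], bool]) -> RunResult:
    """Execute steps in order, validating each one *before* acting.
    The first step that fails validation pauses the whole run."""
    result = RunResult()
    for step in steps:
        if not is_aligned(step):
            result.paused_at = step.description
            break  # hand off to a human instead of pressing on
        result.completed.append(step.action())
    return result

# Toy alignment rule (hypothetical): no irreversible action without review.
def no_destructive_actions(step: Step) -> bool:
    return "delete" not in step.description.lower()

plan = [
    Step("Draft reply to customer", lambda: "reply drafted"),
    Step("Delete customer account", lambda: "account deleted"),
    Step("Send reply", lambda: "reply sent"),
]
outcome = run_with_checkpoints(plan, no_destructive_actions)
print(outcome.completed)  # ['reply drafted']
print(outcome.paused_at)  # Delete customer account
```

The third step never runs. Here pausing is the intended behavior, not a failure: the agent stops at the point where its path no longer matches the desired outcome.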

The Path Forward: Building for Understanding

As we integrate AI agents into our workflows, our focus must shift from execution to alignment. The goal isn’t to have the most active agents; it’s to have the most discerning ones.

Before you deploy your next automation, ask yourself:

* Does this agent know what "success" looks like?

* Does it have the framework to say "no" to a task that contradicts our values?

Automation without understanding is just noise. Real value lies in the signal.

